Serverless Architecture: Should You Make the Switch?

In today’s fast-evolving tech landscape, businesses and developers are constantly seeking ways to optimize performance, reduce costs, and scale applications seamlessly. One such innovation that has gained significant traction is serverless architecture.

But what exactly is serverless computing? Is it truly “serverless”? And most importantly—should you make the switch?

This article provides a deep dive into serverless computing, exploring its benefits, drawbacks, and real-world use cases to help you decide if it’s the right fit for your next project.

What Is Serverless Architecture?

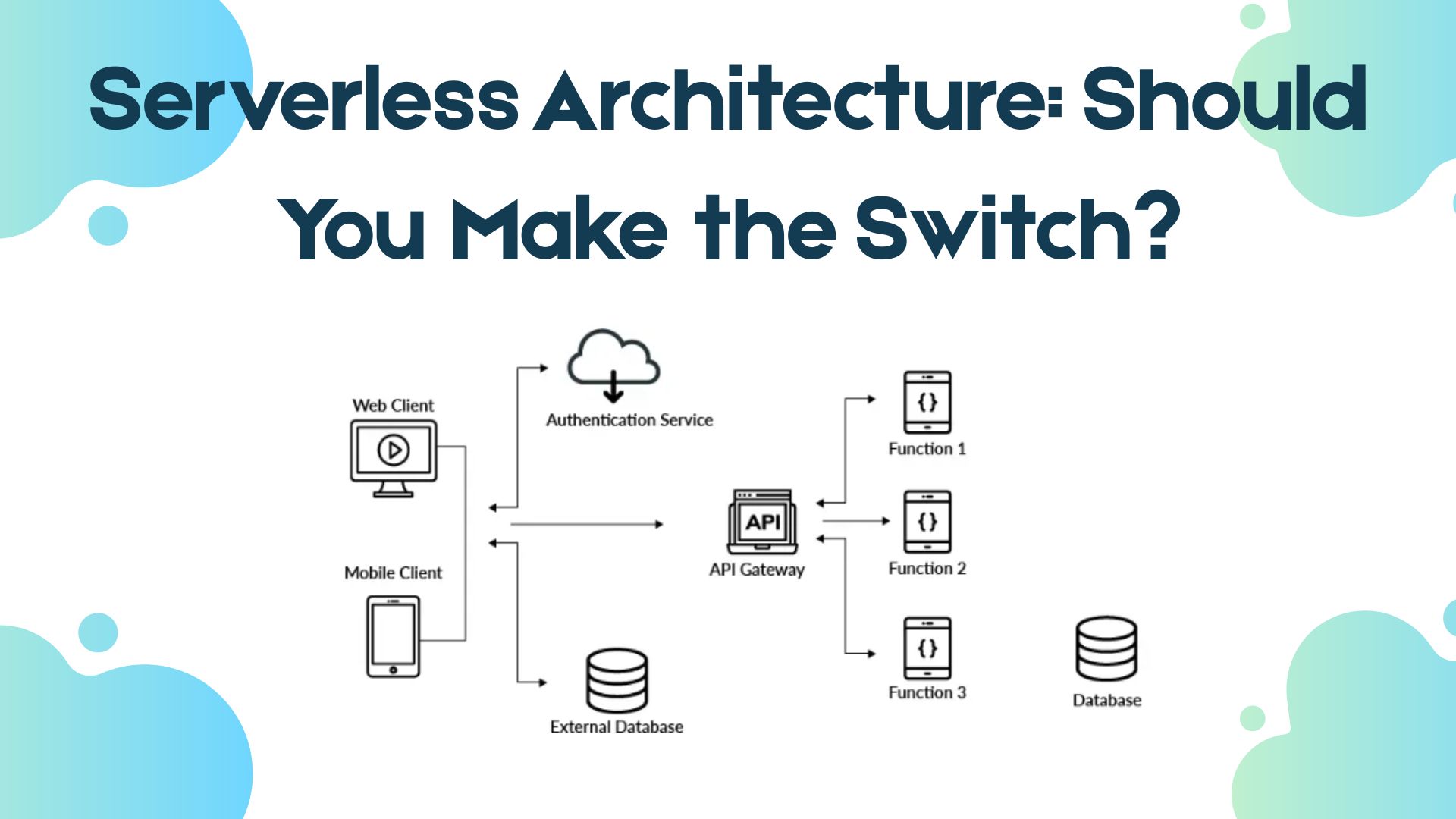

Despite its name, serverless computing does not mean there are no servers involved. Instead, it refers to a cloud computing model where the cloud provider (like AWS, Azure, or Google Cloud) dynamically manages the allocation and provisioning of servers.

Developers focus solely on writing and deploying code without worrying about infrastructure management—such as server maintenance, scaling, or capacity planning.

Key Characteristics of Serverless Computing

- Event-Driven Execution – Functions run only when triggered by events (e.g., HTTP requests, database changes, file uploads).

- Automatic Scaling – Resources scale up or down based on demand, with no manual intervention.

- Pay-Per-Use Pricing – You only pay for the compute time consumed, not idle server time.

- Stateless Functions – Each function execution is independent, with no persistent memory between runs.

How It Works: A Simple Example

Imagine you have an e-commerce app where users upload product images. Instead of running a dedicated server to process image thumbnails, you deploy a serverless function (e.g., AWS Lambda) that triggers whenever a new image is uploaded to cloud storage.

- Trigger: User uploads an image to Amazon S3.

- Execution: AWS Lambda automatically processes the image (resizing, compression).

- Result: The processed thumbnail is saved back to storage, and the function shuts down until the next upload.

This eliminates the need for a constantly running server, reducing costs and operational overhead.

Pros of Serverless Architecture

1. Reduced Operational Overhead

- No need to manage servers, OS updates, or infrastructure.

- Cloud providers handle scaling, security patches, and availability.

Example: A startup launching an MVP can focus on coding rather than setting up and maintaining servers.

2. Cost Efficiency

- Pay only for the milliseconds your code runs.

- No costs for idle servers (unlike traditional VMs or containers).

Example: A weather API that gets heavy traffic during storms but minimal usage otherwise would save significantly with serverless.

3. Automatic & Instant Scalability

- Functions scale from zero to thousands of concurrent executions seamlessly.

- No need to pre-provision servers for traffic spikes.

Example: A video streaming service experiencing viral demand won’t crash—Lambda or Cloud Functions will scale automatically.

4. Faster Time-to-Market

- Developers deploy code without infrastructure delays.

- Great for rapid prototyping and microservices.

Example: A company building a chatbot can deploy individual functions for NLP, authentication, and database queries independently.

5. Built-in High Availability & Fault Tolerance

- Cloud providers distribute functions across multiple availability zones.

- Failures in one zone don’t disrupt service.

Cons of Serverless Architecture

1. Cold Start Latency

- When a function hasn’t been used recently, it may take extra time (a few hundred ms to seconds) to initialize.

- Not ideal for real-time applications requiring sub-millisecond responses.

Mitigation: Keep functions warm with scheduled pings or use provisioned concurrency (AWS).

2. Vendor Lock-In

- Each cloud provider has its own serverless ecosystem (AWS Lambda, Azure Functions, Google Cloud Functions).

- Migrating between providers can be challenging.

Mitigation: Use frameworks like Serverless Framework or Terraform for multi-cloud deployments.

3. Debugging & Monitoring Complexity

- Distributed tracing is harder since functions are ephemeral.

- Traditional logging tools may not suffice.

Solution: Use AWS X-Ray, Datadog, or New Relic for observability.

4. Limited Execution Time & Resource Constraints

- Most providers impose time limits (e.g., AWS Lambda: 15 minutes max per execution).

- Not suitable for long-running processes (e.g., video encoding, batch processing).

Workaround: Break tasks into smaller functions or use containers (AWS Fargate).

5. Higher Costs at Scale

- For high-traffic applications, pay-per-execution can become expensive compared to reserved instances.

Example: A social media app with millions of daily active users might find serverless pricing less economical than Kubernetes.

When Should You Go Serverless?

Best Use Cases

Event-Driven Applications (e.g., file processing, IoT data handling)

APIs & Microservices (RESTful endpoints, webhooks)

Scheduled Tasks (Cron jobs, automated backups)

Low/Spiky Traffic Apps (Startups, seasonal services)

When to Avoid Serverless

Long-Running Processes (Big data processing, video rendering)

High-Performance Computing (Low-latency trading apps)

Monolithic Applications (Hard to decompose into functions)

Final Verdict: Should You Switch?

Serverless architecture is a game-changer for:

Startups & small teams wanting to minimize DevOps.

Event-driven & scalable apps needing cost-efficient solutions.

Developers who prefer focusing on code rather than infrastructure.

However, traditional servers or containers (like Kubernetes) may still be better for:

- Predictable, high-traffic workloads.

- Long-running processes.

- Applications requiring fine-grained control over infrastructure.

Next Steps

If you’re considering serverless:

- Experiment with a small project (e.g., an API or automation script).

- Monitor costs and performance to see if it fits your needs.

- Evaluate vendor lock-in risks before full-scale adoption.

Conclusion

Serverless computing simplifies development, reduces costs, and enhances scalability—but it’s not a one-size-fits-all solution. By weighing its pros and cons, you can determine whether it aligns with your project’s needs.

For rapid, scalable, and cost-effective deployments, serverless is a compelling choice. However, traditional architectures still hold value for certain use cases.

Will you make the switch? The answer depends on your workload, budget, and long-term goals.